Since time out of mind, people have marveled at the mysterious Mona Lisa smile. Lovers of painting have reported for centuries that her smile changes before your eyes, as if she were a living person. These notes present a straightforward mathematical explanation of how Leonardo’s famous sfumato technique actually works.

In almost all cases I abominate the phrase, but in this special case, the beauty is literally in the eye of the beholder. Below, we’ll look at what that means at a semi-mathematical level and then dissect the image algorithmically to show how it works.

I haven’t seen this explanation anywhere, but if someone else has pointed this out, please let me know.

Hamburgers

First, let me show you a bizarre illusion. My apologies if the image offends, but it’s the best example I am aware of. If you don’t see the illusion, just squint.

One of the kids sent me this image a while back, and I was fascinated. Having studied digital signal processing in my youth, I thought it was pretty obvious how it probably worked, at least in principle, but I looked it up to confirm. It’s done with something called Fourier transforms of digital images that we’ll look at below.

The thesis of this piece is that Leonardo used essentially the same trick to achieve the uniquely elusive, mysterious quality of the Mona Lisa.

By using a similar technique, Leonardo’s painting presents us with two versions of the same portrait that depict significantly different emotions on the subject’s face at the same time.

Several physical factors in how we view the painting can cause shifts in which version of her face predominates in the viewer’s eye. These viewer behaviors can be very subtle and quick. Just as emotions can flow across a living face in a split second, a viewer’s behaviors that change the mix of the two images they perceive can be nearly instant.

To the observer’s eye, it doesn’t really matter whether the shift in the facial expression they are seeing originates as actual movements in the image they are viewing or results from a change in their own mechanisms of sight. Either way, the effect is a perceived shift in the expression of the subject, which gives an uncanny effect in a painted image.

If you already have the general idea of how Fourier Analysis works, please feel free to skip all the way down to the section What Does That Have to Do With Hamburgers?

A Tiny Bit of Math

It’s not necessary to understand how the underlying math works. For our purposes, you only need a general idea of what the math does, not how it does it. If you want to know more, a rigorous and beautifully produced animated explanation by the incomparable Grant Sanderson can be found here: https://www.youtube.com/watch?v=spUNpyF58BY. Sanderson is surely one of the great teachers of our century.

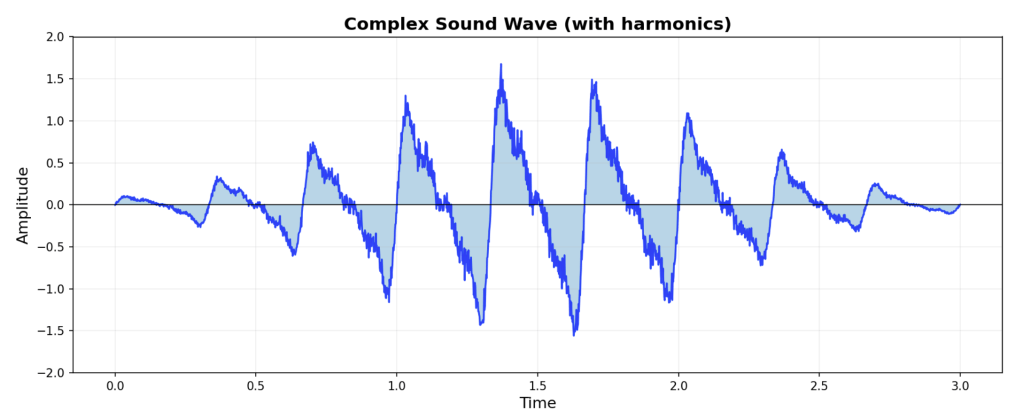

About two hundred years ago, the French mathematician Joseph Fourier discovered a remarkable truth about any signal that varies over time. Think of a sound wave, for example. The most natural way to picture a sound wave is as a wiggly line going from some starting time to some future time. Such a wave is pictured below.

Consider what the graph of the sound wave is expressing. The horizontal axis represents time moving forward, and at each instant in time, the signal, i.e., the corresponding point on the wiggly line, is somewhere on the vertical line that crosses the X axis at the given moment.

As time goes on, you get a continuous wiggle from left to right. If the signal is a sound we can hear, the wiggle will swing up and down like waves on the ocean. When the waves up and down are close together, the sound the graph illustrates is high pitched. When they are far apart, it’s low pitched. The height of the blue line at any point represents how loud the sound is at that instant.

Because the ordinary way of graphing a wave is all about the magnitude of the signal at each instant in time, we call this a time-domain representation of a wave.

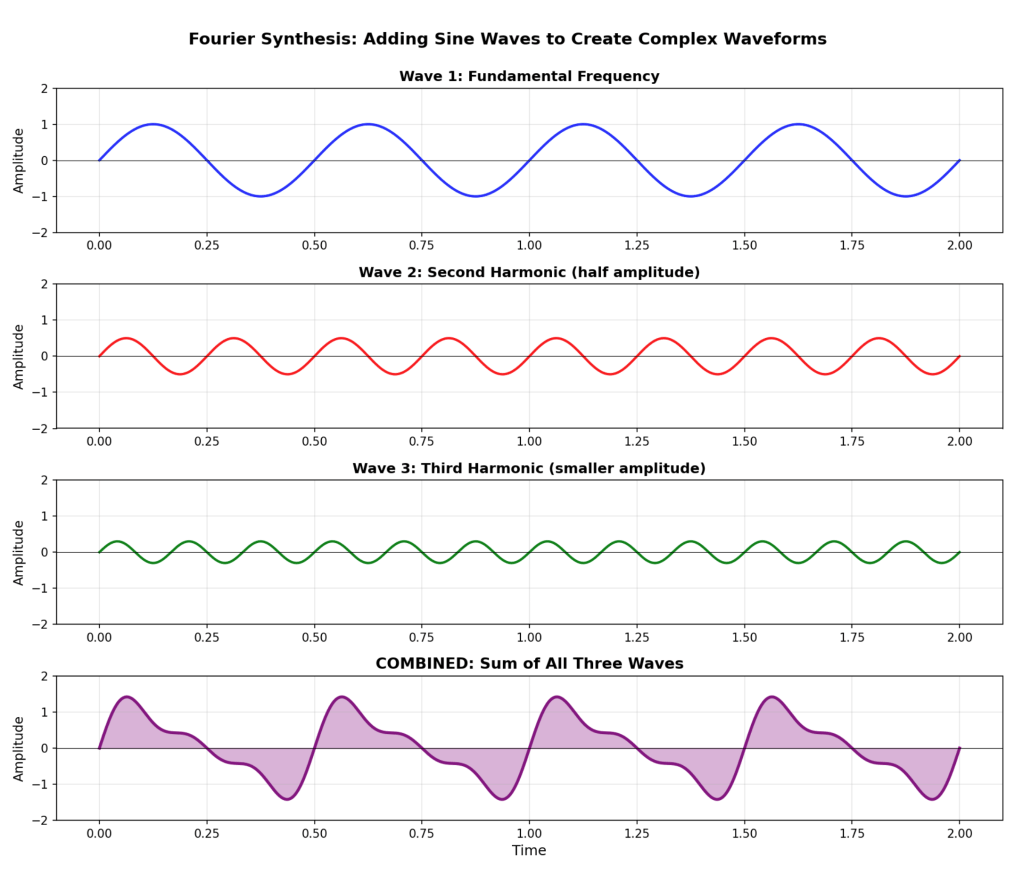

Fourier discovered that under broad and very general conditions, a signal can be represented as the sum of an infinite number of sine waves. (See picture below.) This is wildly unintuitive because sine waves are by definition uniformly repeating, yet they can be combined to represent any waveform.

Because the most important property of a sine wave is its frequency, i.e., how often it repeats, this alternative way of describing a sound wave is called a frequency-domain representation of the signal.

The mathematical process for switching from the time-domain representation to the frequency-domain representation is called a “Fourier transform.” We’re talking about sound as an example, but the same math applies to any signal that is a wave.

The sine waves occur in time too, but they are completely regular. You don’t have to name every point on the curve to describe it, just the frequency and the magnitude. Therefore, the result of a transform is just a list of numbers saying how much of each frequency wave to add in.

Tuning forks emit sound waves that are pretty close to being perfect sine waves, so we can picture Fourier’s claim as being equivalent to saying that if you had an infinite collection of tuning forks of all different frequencies, and you hit them all at the same instant with just the right force each, you could hear Lincoln recite the Gettysburg Address.

This means that any sound you care to dream up can be represented as a list of numbers saying exactly how hard to hit each tuning fork.

The illustration below shows how you get the complicated wave at the bottom from adding up the three regular sine waves above it. These waves are the sounds of the tuning forks. To compute it, at each point on the X axis, you just add up the up/down values of the corresponding points for waves 1, 2, and 3.
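If you’d like to see that addition spelled out, here is a minimal Python sketch. The three tuning forks (their frequencies and strengths) are made-up values chosen for illustration, not the ones in the picture, and the function names are my own.

```python
import math

def sine_wave(freq_hz, amplitude, t):
    """Height of one 'tuning fork' wave at time t (in seconds)."""
    return amplitude * math.sin(2 * math.pi * freq_hz * t)

# Three hypothetical tuning forks: (frequency in Hz, strength of the tap).
FORKS = [(1.0, 1.0), (3.0, 0.5), (5.0, 0.25)]

def combined_wave(t, forks=FORKS):
    """Add the three waves point by point, exactly as described above."""
    return sum(sine_wave(freq, amp, t) for freq, amp in forks)

# Sample one second of the combined wave at 100 evenly spaced instants.
times = [i / 100 for i in range(100)]
samples = [combined_wave(t) for t in times]

print(round(combined_wave(0.25), 6))  # 0.75  (that is 1.0 - 0.5 + 0.25)
```

Plot `samples` against `times` and you get a lumpy wave that looks nothing like a sine wave, even though it is made of nothing else.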

To be able to represent absolutely any signal perfectly you would need an infinite range of sine waves, but as a practical matter, you can reduce most signals to a manageable number of sine waves that will reproduce the original signal almost exactly.

A variation on this called a discrete Fourier transform works with sampled data, which is what we usually have in engineering applications. A DFT represents a sequence of samples as a sum of a finite set of sine waves. There is much more to it, but that is as deep as we really need to go–this is about art, not signal processing.
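For the curious, here is roughly what a DFT computes, written the slow, naive way so that nothing is hidden. Treat it as a sketch only: real libraries use the much faster FFT algorithm, and the test signal (two sine waves at 4 and 10 cycles per second) is invented for the example.

```python
import cmath
import math

def dft(samples):
    """Naive discrete Fourier transform: one complex number per frequency."""
    n = len(samples)
    return [
        sum(x * cmath.exp(-2j * math.pi * k * i / n) for i, x in enumerate(samples))
        for k in range(n)
    ]

# 64 samples covering one second of a wave built from two sine waves:
# 4 cycles/second at strength 1.0 plus 10 cycles/second at strength 0.5.
N = 64
signal = [
    math.sin(2 * math.pi * 4 * i / N) + 0.5 * math.sin(2 * math.pi * 10 * i / N)
    for i in range(N)
]

spectrum = dft(signal)

# Strength of each sine wave from 0 up to N/2 cycles per second.
# (The factor 2/N converts raw DFT values to amplitudes for nonzero frequencies.)
amplitudes = [2 * abs(c) / N for c in spectrum[: N // 2]]

# The two frequencies that carry essentially all of the signal.
strongest = sorted(range(N // 2), key=lambda k: amplitudes[k], reverse=True)[:2]
print(sorted(strongest))  # [4, 10]
```

The transform hands back the recipe: `amplitudes[4]` comes out at about 1.0, `amplitudes[10]` at about 0.5, and every other entry is effectively zero. That list of numbers is the "how hard to hit each tuning fork" list.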

Let’s make it concrete. We are all familiar with one application of this: cleaning up audio. Think of static in the sound from an old fashioned record. It’s very easy to erase just the pops and clicks if you represent the data in the frequency domain. Recall that the transformed data is just a list of numbers for how much input you need from each tuning fork to reproduce the original signal.

We can think of static as being extremely high frequency. (It’s a little more complicated, but we don’t really care about the math here.) Why? Because physically it comes from hair-fine scratches or dust particles on a record that produce almost instantaneous clicks when the record player needle hits them. These events are nearly instant compared to how far the needle moves down the record groove to make the much lower frequency notes we actually care about in music or speech.

To get rid of the scratches, you reduce or remove the input from the highest frequency tuning forks. Think of it as setting the strength of the taps we will give them to zero. Sounds that are that high pitched are almost never a legitimate part of the desired music signal, so who cares? We lose almost nothing by tossing them.

When you convert the sine coefficients back to the time domain, i.e., add up all the sine waves in the right amounts to get the recording back, like magic, the scratches are gone because all the super-high frequency components of the sound are gone.
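Here is a toy version of that round trip using NumPy’s FFT routines. Everything about the "recording" is an assumption made up for the demo: the sample rate, the single 5 Hz note, the three one-sample clicks, and the 50 Hz cutoff. Real audio restoration is considerably more careful than this.

```python
import numpy as np

rate = 1000                          # samples per second (assumed)
t = np.arange(rate) / rate           # one second of "audio"
music = np.sin(2 * np.pi * 5 * t)    # a pure 5 Hz note stands in for the music

noisy = music.copy()
noisy[[123, 456, 789]] += 1.0        # three instantaneous clicks

# Time domain -> frequency domain.
spectrum = np.fft.rfft(noisy)
freqs = np.fft.rfftfreq(len(noisy), d=1 / rate)   # frequency of each entry, in Hz

# Set the taps on all the high-pitched tuning forks to zero.
spectrum[freqs > 50] = 0

# Frequency domain -> time domain.
cleaned = np.fft.irfft(spectrum, n=len(noisy))

print(np.abs(noisy - music).max())    # size of the clicks before filtering
print(np.abs(cleaned - music).max())  # far smaller: most of each click is gone
```

The note itself passes through untouched because it lives well below the cutoff. Only the small low-frequency portion of each click survives.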

Much of digital sound processing is some version of this back and forth between time-domain and frequency domain representations of the signal.

What Does That Have to Do With Hamburgers?

You can do the same thing with a two-dimensional image that we just did with one-dimensional sound. It’s the same basic math.

For instance, specks on an image are the two-dimensional equivalent of static. Removing them is essentially the same process as removing static from a sound recording. You zero out all the super high frequency components of the image.

Now, consider what would happen if you did a DFT of an image and then did the opposite of removing speckles, i.e., threw away all the values except the high frequencies. What is left represents the areas where one part of the picture abruptly changes into another, i.e., edges. That’s essentially how Photoshop turns a photo into a line drawing. If you do the reverse, throwing away the high frequencies and keeping only the lower frequency components, there are no edges, just blurs.
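A small sketch of that split, using NumPy’s two-dimensional FFT on a synthetic picture (a white square on black). The function name, the `cutoff` value, and the test image are all my own illustrative choices, and the all-or-nothing mask used here is the crudest possible filter.

```python
import numpy as np

def split_frequencies(image, cutoff):
    """Split a grayscale image into low- and high-frequency parts.

    `cutoff` is a radius in cycles per image width/height. It is a
    hypothetical tuning parameter chosen for this sketch.
    """
    rows, cols = image.shape
    fy = np.fft.fftfreq(rows) * rows      # vertical frequency of each FFT entry
    fx = np.fft.fftfreq(cols) * cols      # horizontal frequency of each FFT entry
    radius = np.sqrt(fy[:, None] ** 2 + fx[None, :] ** 2)
    keep_low = radius <= cutoff           # True for the low frequencies only

    spectrum = np.fft.fft2(image)
    low = np.fft.ifft2(spectrum * keep_low).real     # blurs, no edges
    high = np.fft.ifft2(spectrum * ~keep_low).real   # edges, no broad shading
    return low, high

# Synthetic test image: a white square on a black background.
image = np.zeros((64, 64))
image[24:40, 24:40] = 1.0

low, high = split_frequencies(image, cutoff=4)
print(np.allclose(low + high, image))  # True: the two parts add back to the original
```

Display `low` and you see a soft blob; display `high` and what stands out is the outline of the square.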

And that is the key to turning a hamburger into Hitler and back again.

- The picture of a hamburger is transformed to the frequency domain and the low frequency components are reduced or eliminated.

- The picture of Hitler is transformed to the frequency domain and the high frequency components are reduced or eliminated.

- Then the two sets of frequency components are merged, so the high frequency part of the spectrum is hamburger and the low frequency part is Hitler.

- The merged frequency-domain data is converted back to an ordinary image (the two-dimensional counterpart of the time domain), giving us the combined image.
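The recipe above can be sketched in a few lines of NumPy. I don’t know what filter the maker of the hamburger picture actually used; this version assumes a Gaussian filter, and it uses two synthetic stand-ins (fine stripes for the detailed picture, a broad blob for the coarse one) rather than real photographs. The names `gaussian_lowpass`, `hybrid`, and `sigma` are mine.

```python
import numpy as np

def gaussian_lowpass(image, sigma):
    """Blur an image by multiplying its spectrum by a Gaussian.

    `sigma` is the blur radius in pixels (an assumed tuning parameter).
    """
    fy = np.fft.fftfreq(image.shape[0])[:, None]   # cycles per pixel, vertical
    fx = np.fft.fftfreq(image.shape[1])[None, :]   # cycles per pixel, horizontal
    # Frequency response of a Gaussian blur with standard deviation sigma.
    transfer = np.exp(-2 * (np.pi * sigma) ** 2 * (fx ** 2 + fy ** 2))
    return np.fft.ifft2(np.fft.fft2(image) * transfer).real

def hybrid(detail_image, coarse_image, sigma=4.0):
    """High frequencies from detail_image, low frequencies from coarse_image."""
    high = detail_image - gaussian_lowpass(detail_image, sigma)
    low = gaussian_lowpass(coarse_image, sigma)
    return low + high

# Stand-ins for the two photographs.
y, x = np.mgrid[0:64, 0:64]
stripes = 0.5 + 0.5 * np.sin(2 * np.pi * x / 4)                    # fine detail
blob = np.exp(-((x - 32) ** 2 + (y - 32) ** 2) / (2 * 12.0 ** 2))  # broad shape

combined = hybrid(stripes, blob)

# "Squinting" at the result: blur it, and only the broad shape remains.
squinted = gaussian_lowpass(combined, 4.0)
```

Viewed as is, `combined` is dominated by the stripes. Blur it, as squinting does, and the stripes vanish and the blob is what you see.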

The Image Transforms Because Your Eyelashes Act as a Low-Pass Filter.

When you squint, your eyelashes act as what is called a low-pass filter. They interfere with seeing the fine details, i.e., the high frequencies, so the hamburger largely drops out. However, the low frequencies, i.e., the larger features, tend to pass through the screen of lashes mostly intact, so the face comes through.

There are some details about choosing the actual images so they have the right visual elements in the right places, etc. but that’s the fundamental trick.

The Mona Lisa Smile

Perhaps you see where we’re going with this?

Leonardo worked three hundred years before Fourier, but you don’t actually need Fourier’s math to apply the principle by hand.

The hypothesis here is that Leonardo effectively painted portions of the canvas in two versions, rendering one version of the image as it is seen through what amounts to a low-pass filter and another version as seen relatively normally. The low-frequency version is what is rendered in “sfumato,” a term derived from the Italian word for smoke.

The smoky version can be isolated by the artist because, as discussed above, squinting acts as a low-pass filter. There isn’t an obvious analogous way to automatically isolate the high-frequency components of the artist’s view, but normal vision supplies all the information needed to paint the more finely detailed normal view.

It’s not necessary for a viewer to squint in order to perceive the shifting effect of seeing a varying blend of high and lower frequency components. The eye itself can do it because the resolution of the retina varies a great deal. The macula region of the retina, in particular the region within the macula known as the fovea centralis, has much higher resolution than most of the retina and is therefore more sensitive to high frequency detail than the rest of the retina. The viewer looking directly at her face sees with maximum resolution, and therefore favors the fine detail, while the face as seen with the retinal area outside the macula, or even simply outside the fovea centralis, will show less of the detail and tend to emphasize the low-frequency version of the image. Therefore, the viewer’s eyes moving over the image subtly shift what they see in her facial expression.

That different parts of the eye see differently is well known. For another example, if you want to see movement in the dark, don’t stare at where you think it is. Look near where you think it is, and pay attention to what’s not in the center of your vision. These outer areas of the retina have lower resolution, but are much more sensitive in the dark.

The genius is less in Leonardo’s manual technique (although his manual skills were unsurpassed) than in his uncanny intuition of why it would produce the magic effect of having the picture subtly morph back and forth, producing in the eye a continuum of images, depending on how the observer looked at it.

Dissecting the Mona Lisa Smile

It is one of the miracles of our age that you don’t need to be able to program a computer, or indeed to know very much about math, let alone Fourier analysis, to use advanced math as a tool. You can literally just tell the computer what you’re trying to do and it will code it up for you.

And thank God. I haven’t done a lot of this kind of programming in a while, so I would have had to mess around half the night figuring out how to get this program working. It’s not a complex program, but you need to know all the painful details of what libraries the math routines are in, what functions take what arguments, how to apply them, etc. It adds up to a chore I’d probably never get around to on my own.

Therefore, I asked the AI Claude Sonnet 4.5 to whip up a Python program to break down an image of La Gioconda’s face, and apply high pass and low pass filters to it so we could see if this is indeed what Leonardo did. It only took about a couple of paragraphs of typing to get Sonnet to write the program, and a little more back and forth to get it to run it for me. I had the first computational results within an hour of deciding to try it.

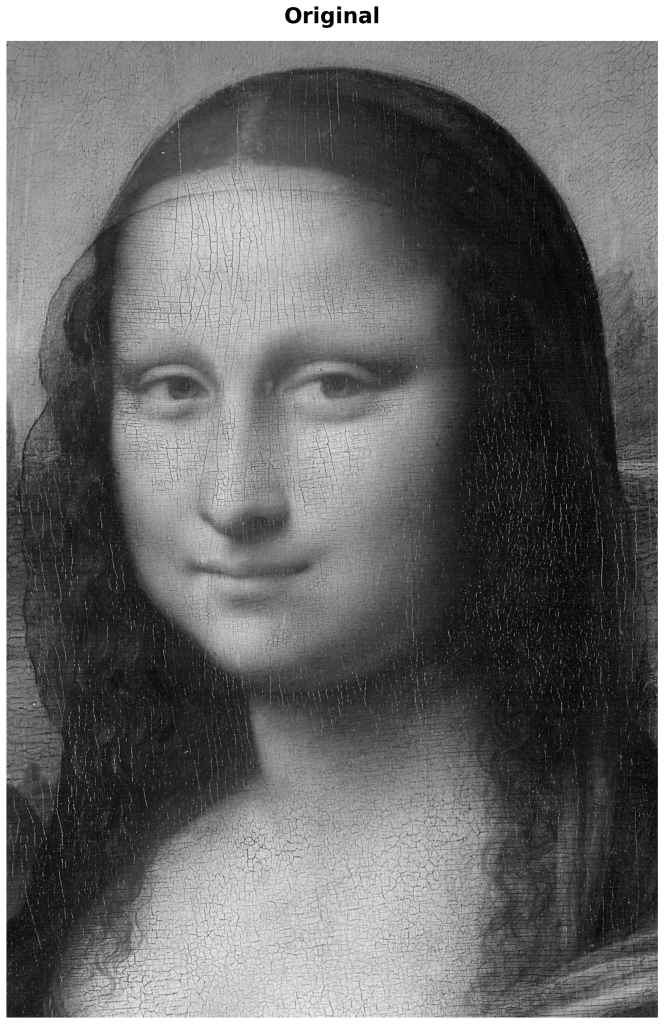

The photo centered below is the original rendered in black and white.

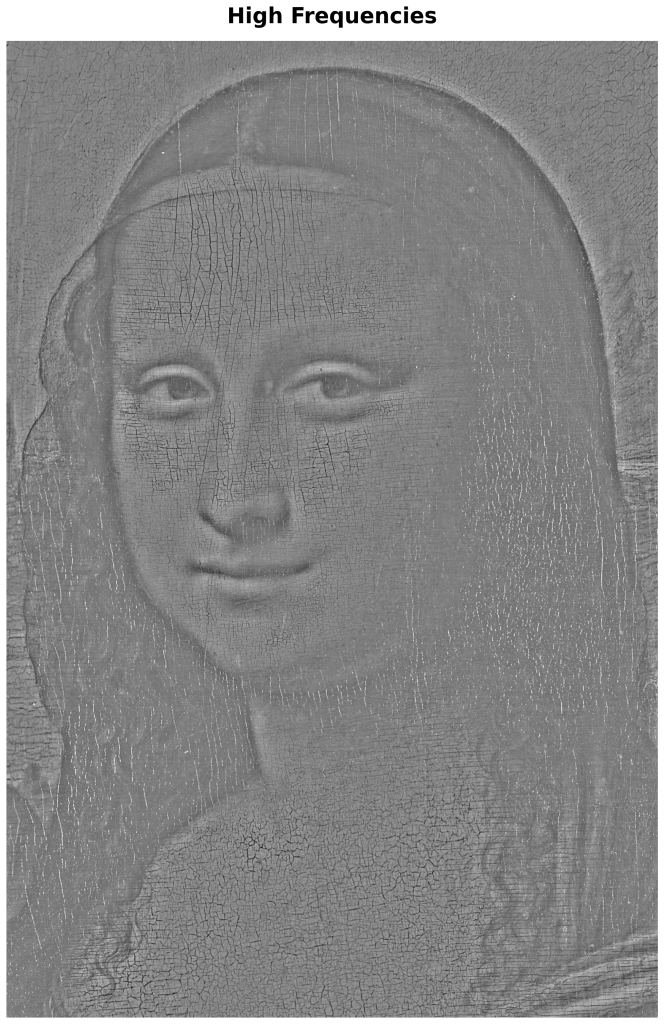

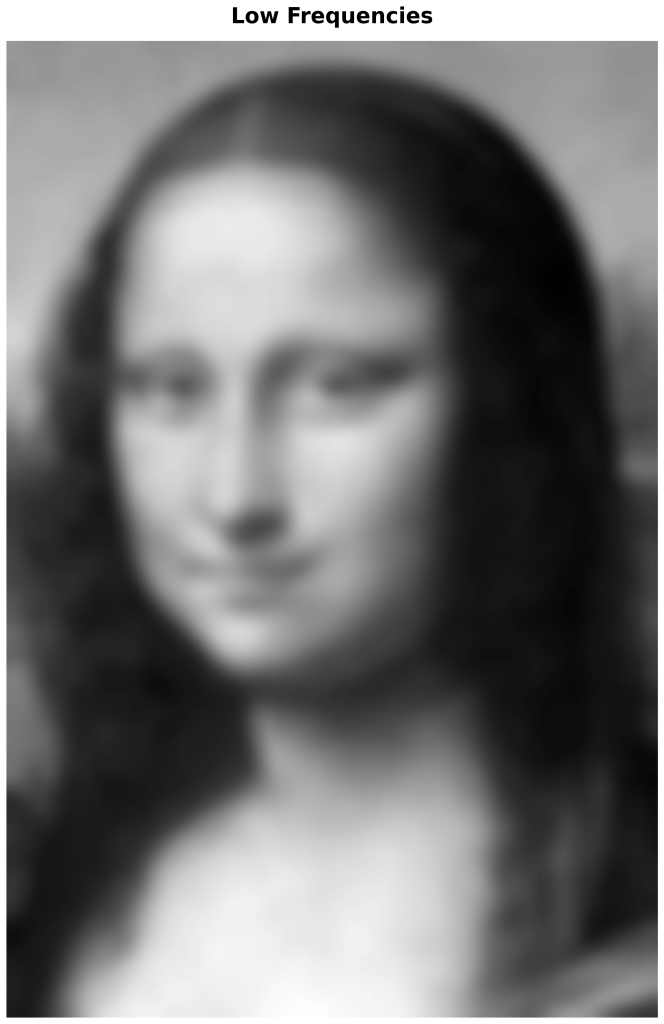

Below are the low-pass and high-pass versions. The high-pass version is almost outlines, because it shows where the rapid changes in the image occur. At the risk of belaboring the point, the low-pass version is blurry because it removes all the abrupt changes that you see in the high-pass version.

The original photo was cropped from a high resolution image courtesy of Wikimedia Commons, File:Mona Lisa, by Leonardo da Vinci, from C2RMF.jpg.

The spoiler is, yes, the facial expressions in the low-pass and the high-pass versions are strikingly different in coherent ways. I found that the more I looked, the more apparent the differences became.

Below, we look systematically at some of the differences in the components of the facial expressions.

We’re not going to try to get into why Leonardo chose the particular expressions he used for each version. What matters is that the expressions are systematically different across the two frequency ranges, and that the details group coherently in the two versions. In other words, the components of the facial expressions in each version make sense together.

The technical specifics on facial mechanics cited here come out of the Facial Action Coding System (FACS) which is a standard reference for the meaning of facial expressions and the muscle actions that produce them.

Eyebrows

The eyebrows in the low-pass version are in a classic configuration produced by the corrugator supercilii muscle, which pulls the brows downward and inward, combined with the frontalis muscle which pulls the inner part of the brows upward toward the hairline. In the FACS, the inner brow raise is Action Unit 1 (AU1), and the accompanying brow lowering by the corrugator is Action Unit 4 (AU4).

This motion of the eyebrows tends to show distress, sadness, haplessness, and vulnerability and is referred to in the context of illustration or animation as the puppy dog brow. It is ubiquitous in contemporary Disney animation, where it is used to convey adorable haplessness, particularly when the main characters are charming each other.

In contrast, the pronounced puppy dog brow is hardly visible at all in the high frequency version of the image, and in both the original and the high frequency version slightly arched eyebrows can be seen.

Mouth

In the low frequency version we see a pronounced display of expression AU12, which is the pulling up of the corners of the mouth by the zygomaticus major muscles, but without enough force to lift the cheeks or produce a broad grin. For most of its width the line between the lips is quite flat. We only see a slight curling upward at the corners producing a faint, almost watery smile. You can try this yourself. Just allow the muscles that pull up on the corners of the mouth to contract a little, so you get the corners curling up without significantly affecting the cheeks.

This tends to express bittersweet emotion or feelings of sad affection, resignation, and compassion mixed with pain. It might be seen in a person accepting loss, expressing love they don’t expect to be returned, etc. Its pairing with AU1 in the low frequency image is highly consistent.

Note that in the high frequency picture, the corners of the mouth hardly turn up at all, but the entire mouth forms a gentle banana-like curve, giving a more natural pleased smile. This is consistent with the depiction of the eyebrows in the high frequency image.

Eyes

A genuine smile of pleasure shows in the bunching of the cheeks as well as in the shape of the mouth, but it is always accompanied by a very distinctive contraction of the orbital part (pars orbitalis) of the orbicularis oculi muscle, as in the AU6 expression.

The AU6 action raises the cheeks, compresses the skin under the eyes and produces a bulging under the lower eyelids. It also produces crow’s feet, particularly in a mature person. This kind of smile accompanies positive feelings and communicates the joy of a smiling person through the eyes even if the rest of the face is hidden. It is the hallmark of what is known as “the Duchenne smile.” It is a positive, genuine and emotionally uncomplicated smile involving the whole face.

Note how much more pronounced this collection of effects is in the high frequency version and how relatively absent it is in the low-pass version.

The appearance of these features in the low-pass and high-pass images is very consistent with the respective eyebrow and mouth depictions.

Taken Together

Overall, the combination of eyebrows, eyes, and smile forms two tight patterns of strikingly differing expressions.

The expression that comes through in the lower frequencies is somewhat wounded, tender, sad, and vulnerable, while the expression that comes through in the higher frequencies is confident and strikingly happier, smiling but not grinning, with the pronounced bunching under the eyes that is characteristic of the Duchenne smile.

Note also the rounded appearance of the cheeks in the higher frequency version. This is another facial expression that is typical of joy or pleasure. In contrast, there is no bunching of the cheeks in the low frequency version, where the lady actually appears to be almost gaunt.

Leonardo is infinitely more subtle than the author of the hamburger Hitler picture, but a glance back at the hamburger picture reminds us of the power of selectively seeing high and low frequencies in imagery.

It is absolutely extraordinary that Leonardo was able to produce this effect by hand, essentially painting two different versions of the same face in different frequency registers. It’s an astounding feat of observation and a remarkable intellectual feat.

Directions

The differences in facial expressions between the high-pass and low-pass versions are strong, and each version is a coherent clustering. There’s nothing random looking about it.

The astonishing thing is that Leonardo was able to imagine this effect without any mathematical background suggesting why it might work. It suggests a power of observation that is almost superhuman.

The simple mathematical illustration above uses only a single arbitrarily chosen setting for the high vs. low frequency division. The approach could be taken further. It would be an interesting exercise to animate the division point, i.e., to allow a knob to be turned to adjust the proportion of high vs. low on the fly. In this way you could produce, on demand, the shifts that people looking at the picture are so entranced by. Turn the knob one way and see the more joyful lady. Turn it the other and see the fragile, perhaps wounded, lady.
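One way such a knob might work is sketched below. This is my own guess at a design, not something I have run against the painting: the knob (`detail_gain`) scales how much fine detail is added back on top of the blurred image, and `sigma` sets where the division between high and low falls. A random array stands in for the photograph.

```python
import numpy as np

def lowpass(image, sigma):
    """Gaussian blur applied in the frequency domain (sigma in pixels)."""
    fy = np.fft.fftfreq(image.shape[0])[:, None]
    fx = np.fft.fftfreq(image.shape[1])[None, :]
    transfer = np.exp(-2 * (np.pi * sigma) ** 2 * (fx ** 2 + fy ** 2))
    return np.fft.ifft2(np.fft.fft2(image) * transfer).real

def turn_knob(image, detail_gain, sigma=20.0):
    """Rebuild the image with its fine detail scaled by detail_gain.

    0.0 gives the blurred version only, 1.0 reproduces the input,
    and values above 1.0 exaggerate the fine detail.
    """
    low = lowpass(image, sigma)
    high = image - low
    return low + detail_gain * high

# Stand-in for a grayscale photo; swap in real pixel data to experiment.
rng = np.random.default_rng(0)
image = rng.uniform(0, 255, size=(32, 32))

# Nine knob positions, from blur only to doubled detail.
frames = [turn_knob(image, gain) for gain in np.linspace(0.0, 2.0, 9)]
```

Showing the `frames` in sequence would give the animation. Sweeping `sigma` instead of `detail_gain` would move the dividing line itself.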

Another factor that is not explored here is that the painting is in color. In principle, the manipulation of frequency registers could be different across the spectrum. But that’s not a blog post; with that degree of subtlety, one would be getting into dissertation territory.

Readers are encouraged to explore the FACS to find more detail. The FACS describes every static facial expression humans are capable of making in terms of the specific muscles that move and the emotional states they betoken. It is a remarkable document.

One useful tip: this kind of exploration of the mechanics of expression can be very effectively facilitated by AI. If you describe the facial movements verbally, the LLM will quickly zero in on the corresponding technical descriptions in the FACS.

Trying It Out For Yourself

I did not write the code by hand. I described what I wanted to Claude Sonnet 4.5, which generated the Python program below in a couple of minutes.

Anybody who knows even a little Python can make this work on any computer that has Python installed. Four lines down is where the program reads in the image. All you’d have to do is substitute a location for the file on your own machine. This is just a toy program–one could easily enhance it for better usability.

However, if you just want to fiddle around, or lack the skills to run it manually, you can simply ask Sonnet (or probably GPT) to do it for you. I did not execute these runs myself. Sonnet ran the program for me. I only downloaded the code in order to include it here.

Since this version is known to work, you can upload it to ensure that you get comparable results, or you can just get Sonnet to write it from scratch. The code here produces six versions. If you want only the three versions I’ve used here, Sonnet will gladly modify it to suit.

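Note that the first three lines import the libraries for filtering the image, executing miscellaneous math tasks, and laying out the result. The program never calls a Fourier transform explicitly: it blurs the image with a Gaussian filter, which acts as a low-pass filter, and subtracts the blur from the original to get the high-pass version. No real math is shown in the program as given–inside those libraries is where all of the real action is.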
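The program’s filtering step is a call to `cv2.GaussianBlur` rather than a literal Fourier transform, so it is fair to ask whether the frequency-domain story still applies. It does: sliding a blur kernel along a signal gives the same result as multiplying the two spectra and transforming back. Here is a quick one-dimensional check of that claim in NumPy. The signal and kernel are arbitrary, and the ends wrap around, whereas OpenCV by default reflects the image at its borders, so its output would differ slightly near the edges.

```python
import numpy as np

rng = np.random.default_rng(1)
signal = rng.normal(size=128)             # any 1-D signal will do

# A small Gaussian kernel, normalized so it preserves overall brightness.
offsets = np.arange(-8, 9)
kernel = np.exp(-offsets ** 2 / (2 * 2.0 ** 2))
kernel /= kernel.sum()

# Route 1: blur directly by sliding the kernel along the signal (wrapping at the ends).
n = len(signal)
direct = np.array([
    sum(kernel[j] * signal[(i - offsets[j]) % n] for j in range(len(kernel)))
    for i in range(n)
])

# Route 2: multiply the spectra, then transform back.
padded = np.zeros(n)
padded[offsets % n] = kernel              # kernel centered on index 0, wrapped
via_fft = np.fft.ifft(np.fft.fft(signal) * np.fft.fft(padded)).real

print(np.allclose(direct, via_fft))       # True
```

The spectrum of a Gaussian kernel is itself a Gaussian that fades toward zero at high frequencies, which is why the blur behaves as a gentle low-pass filter.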

The code you see is essentially boilerplate except for the hard-coded values. A more sophisticated exploration would include a way to tune those constants in various ways, including adjusting the dividing lines between high and low, etc.

As mentioned above, for understanding the math, 3Blue1Brown (Grant Sanderson’s channel) can’t be beat. If you want to understand the DFT at an algorithmic level, there are many online references that will explain it and show you implementations in the language of your choice.

The Python Code

It is not a great deal of code.

```python
import cv2
import numpy as np
import matplotlib.pyplot as plt
img = cv2.imread('/mnt/user-data/uploads/Mona_Lisa__by_Leonardo_da_Vinci__from_C2RMF_closeup.jpg')
if img is None:  # cv2.imread returns None instead of raising when the file is missing
    raise FileNotFoundError('Could not read the input image; check the path above.')
img_rgb = cv2.cvtColor(img, cv2.COLOR_BGR2RGB)
gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
print(f"Image size: {gray.shape}")

# Low-pass version: a Gaussian blur removes the fine detail.
low_freq = cv2.GaussianBlur(gray, (71, 71), 20)

# High-pass version: whatever the blur removed from the original.
high_freq = gray.astype(float) - low_freq.astype(float)
high_freq = high_freq + 128  # Shift to middle gray
high_freq = np.clip(high_freq, 0, 255).astype(np.uint8)

# A much heavier blur, for comparison.
very_low_freq = cv2.GaussianBlur(gray, (151, 151), 50)

# Figure 1: six-panel overview.
fig, axes = plt.subplots(2, 3, figsize=(16, 11))

axes[0, 0].imshow(img_rgb)
axes[0, 0].set_title('Original\n(All frequencies)', fontsize=13, fontweight='bold')
axes[0, 0].axis('off')

axes[0, 1].imshow(low_freq, cmap='gray')
axes[0, 1].set_title('Low Frequencies\n(Far away / squinting view)', fontsize=13, fontweight='bold')
axes[0, 1].axis('off')

axes[0, 2].imshow(high_freq, cmap='gray')
axes[0, 2].set_title('High Frequencies\n(Fine details / sfumato edges)', fontsize=13, fontweight='bold')
axes[0, 2].axis('off')

axes[1, 0].imshow(very_low_freq, cmap='gray')
axes[1, 0].set_title('Very Low Frequencies\n(Extreme distance)', fontsize=13, fontweight='bold')
axes[1, 0].axis('off')

axes[1, 1].imshow(low_freq, cmap='gray')
axes[1, 1].set_title('What You See Squinting\n(Does the smile change?)', fontsize=13, fontweight='bold')
axes[1, 1].axis('off')

axes[1, 2].imshow(gray, cmap='gray')
axes[1, 2].set_title('Close-Up Detail\n(Full resolution)', fontsize=13, fontweight='bold')
axes[1, 2].axis('off')

plt.suptitle('Mona Lisa Face - Spatial Frequency Analysis\nDoes the expression change with viewing distance?',
             fontsize=15, fontweight='bold', y=0.98)
plt.tight_layout()
plt.savefig('/home/claude/mona_face_analysis.png', dpi=150, bbox_inches='tight')
print("Face analysis complete!")

# Figure 2: four-panel expression comparison.
fig2, axes2 = plt.subplots(2, 2, figsize=(13, 13))

axes2[0, 0].imshow(img_rgb)
axes2[0, 0].set_title('Original - Full Detail', fontsize=14, fontweight='bold')
axes2[0, 0].axis('off')

axes2[0, 1].imshow(low_freq, cmap='gray')
axes2[0, 1].set_title('Blurred (Low Freq)\nSmiling more?', fontsize=14, fontweight='bold')
axes2[0, 1].axis('off')

axes2[1, 0].imshow(high_freq, cmap='gray')
axes2[1, 0].set_title('Details Only (High Freq)\nDifferent expression?', fontsize=14, fontweight='bold')
axes2[1, 0].axis('off')

medium_blur = cv2.GaussianBlur(gray, (101, 101), 30)
axes2[1, 1].imshow(medium_blur, cmap='gray')
axes2[1, 1].set_title('Medium Blur\nMid-distance view', fontsize=14, fontweight='bold')
axes2[1, 1].axis('off')

plt.suptitle('Expression Comparison: Does It Change Between Frequency Bands?',
             fontsize=14, fontweight='bold')
plt.tight_layout()
plt.savefig('/home/claude/mona_smile_comparison.png', dpi=150, bbox_inches='tight')
print("Smile comparison saved!")

# Save the individual filtered images.
cv2.imwrite('/home/claude/mona_face_low.png', low_freq)
cv2.imwrite('/home/claude/mona_face_high.png', high_freq)
cv2.imwrite('/home/claude/mona_face_very_low.png', very_low_freq)
print("Individual components saved!")
```